Artificial intelligence is evolving rapidly, and one key advancement is the shift in how AI agents interact with tools, driven by the Model Context Protocol (MCP). With MCP, tools live on centralized tool servers and are connected to agents based on context, so intelligent agents can use them on demand. This makes agent systems more scalable, more flexible, and more precise in execution.

This blog shows how agents built on the Model Context Protocol use a centralized server to route and perform tasks more intelligently, paving the way toward smarter and more adaptive AI.

Key takeaways:

- MCP enables AI agents to choose tools dynamically based on user context.

- Centralized tool servers replace local tool dependencies for better scalability.

- Agents become modular, context-aware, and adaptable in real time.

- MCP-based agents are used in real-world cases like customer support, dev copilots, BI assistants, and automation.

- Benefits include flexible tool usage, scalable deployments, smarter task execution, and easy maintenance.

- Challenges include latency, authentication, and failover management.

- The future involves multi-agent systems sharing toolkits and open-source integration.

Table of Contents

- What Is a Model Context Protocol-Based Agent?

- How Centralized Tool Servers Work in AI Systems

- How the Model Context Protocol Enables Tool Routing for Agents

- Real-World Use Cases of MCP-Based AI Agents

- Why Model Context Protocol-Based Agents Are the Future

- Challenges in Centralized Tool Routing

- The Road Ahead: Multi-Agent Systems and Centralized Tool Ecosystems

- Conclusion: Smarter AI Starts with Model Context Protocol and Centralized Tools

What Is a Model Context Protocol-Based Agent?

A Model Context Protocol (MCP) based agent is an AI agent that decides which tool to use, and then accesses it, based on the user’s context. These agents don’t ship with prebuilt capabilities. Instead, they rely on centralized tool servers to dynamically select and route tools based on context.

This allows them to:

- Choose appropriate tools at run-time

- Avoid local tool bloat

- Optimize throughput for real-time activities

This shift marks the foundation of modular, context-aware AI agents capable of selecting the right tools at the right time.
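To make the "no prebuilt capabilities" idea concrete, here is a minimal sketch. It is an in-process stand-in, not the MCP SDK: `Tool`, `ToolServer`, and `Agent` are hypothetical names invented for this example, and a real deployment would reach the server over the network.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    name: str
    description: str
    run: Callable[[str], str]


class ToolServer:
    """Hypothetical in-process stand-in for a centralized tool server."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call(self, name: str, payload: str) -> str:
        return self._tools[name].run(payload)


class Agent:
    """Holds a reference to the server, but no tools of its own."""

    def __init__(self, server: ToolServer) -> None:
        self.server = server

    def available_tools(self) -> List[str]:
        # Looked up on every call, so newly registered tools show up at run-time.
        return self.server.list_tools()


server = ToolServer()
agent = Agent(server)

before = agent.available_tools()
server.register(Tool("translate", "Translate text", lambda text: f"[fr] {text}"))
after = agent.available_tools()

print(before, after)
```

The agent object never changes between the two lookups; only the server's catalog does, which is the point of avoiding local tool bloat.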

How Centralized Tool Servers Work in AI Systems

A centralized tool server is a unified infrastructure that hosts multiple APIs, microservices, and utilities that agents can access as needed.

| Feature | Traditional AI Agents | MCP-Based AI Agents |
| --- | --- | --- |
| Tool Access | Local tools only | Centralized tool servers |
| Context Awareness | Minimal | High (via Model Context Protocol) |
| Tool Management | Manual updates | Centralized & dynamic |
| Scalability | Limited | Modular & extensible |
| Tool Routing | Not dynamic | Smart tool routing for agents |
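One way to picture what a centralized tool server exposes is a catalog of tool descriptors. The sketch below is illustrative only: the field names mirror the name / description / input-schema shape that MCP tool listings use, but the two tools and the helper functions `list_tools` and `find_tool` are made up for this example.

```python
import json

# Hypothetical catalog entries; names, descriptions, and schemas are invented.
CATALOG = [
    {
        "name": "search_help_articles",
        "description": "Find help-center articles matching a query.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
    {
        "name": "generate_report",
        "description": "Build a sales report for a given period.",
        "inputSchema": {
            "type": "object",
            "properties": {"period": {"type": "string"}},
            "required": ["period"],
        },
    },
]


def list_tools() -> str:
    """Return the catalog as JSON, as a server might answer a discovery request."""
    return json.dumps({"tools": CATALOG}, indent=2)


def find_tool(name: str) -> dict:
    """Look up a single descriptor by its tool name."""
    for entry in CATALOG:
        if entry["name"] == name:
            return entry
    raise KeyError(name)


print(list_tools())
```

Because the catalog lives in one place, adding an entry here is all it takes for every connected agent to discover the new tool.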

How the Model Context Protocol Enables Tool Routing for Agents

The Model Context Protocol plays a critical role in enabling intelligent tool routing for AI agents. Here is how it works:

- The agent is triggered by user input.

- The agent examines the context and purpose of the request.

- The agent sends that context to the centralized tool server.

- The server returns the most relevant tool or service.

- The agent then executes the task using the selected tool and delivers the response to the user.

This routing ensures that AI agents use the appropriate tool at the appropriate time, which is one of the keys to a modular AI architecture.
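The routing steps above can be sketched in a few lines of Python. This is a toy under stated assumptions: the post does not say how the server ranks tools, so the keyword-overlap score in `route` is purely an assumption, and `Tool`, `ToolServer`, `Agent`, and the three example tools are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass
class Tool:
    name: str
    keywords: Set[str]
    run: Callable[[str], str]


def tokenize(text: str) -> Set[str]:
    """Reduce user input to a set of lowercase words (a crude 'context')."""
    return set(re.findall(r"[a-z]+", text.lower()))


class ToolServer:
    def __init__(self, tools: List[Tool]) -> None:
        self.tools = tools

    def route(self, context: Set[str]) -> Tool:
        # Assumed scoring rule: count context words that match a tool's keywords.
        best = max(self.tools, key=lambda t: len(t.keywords & context))
        if not best.keywords & context:
            raise LookupError("no tool matches this context")
        return best


class Agent:
    def __init__(self, server: ToolServer) -> None:
        self.server = server

    def handle(self, user_input: str) -> str:
        context = tokenize(user_input)      # examine context and purpose
        tool = self.server.route(context)   # ask the server, receive a tool
        return tool.run(user_input)         # execute and return the response


server = ToolServer([
    Tool("translate", {"translate", "french", "spanish"},
         lambda text: f"translated: {text}"),
    Tool("help_articles", {"help", "article", "reset", "password"},
         lambda text: f"articles for: {text}"),
    Tool("escalate", {"escalate", "human", "manager"},
         lambda text: f"escalated: {text}"),
])
agent = Agent(server)

print(agent.handle("Please translate this into French"))
print(agent.handle("I want to talk to a human"))
```

A production router would likely use something richer than word overlap, such as tool descriptions fed to the model itself, but the control flow stays the same.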

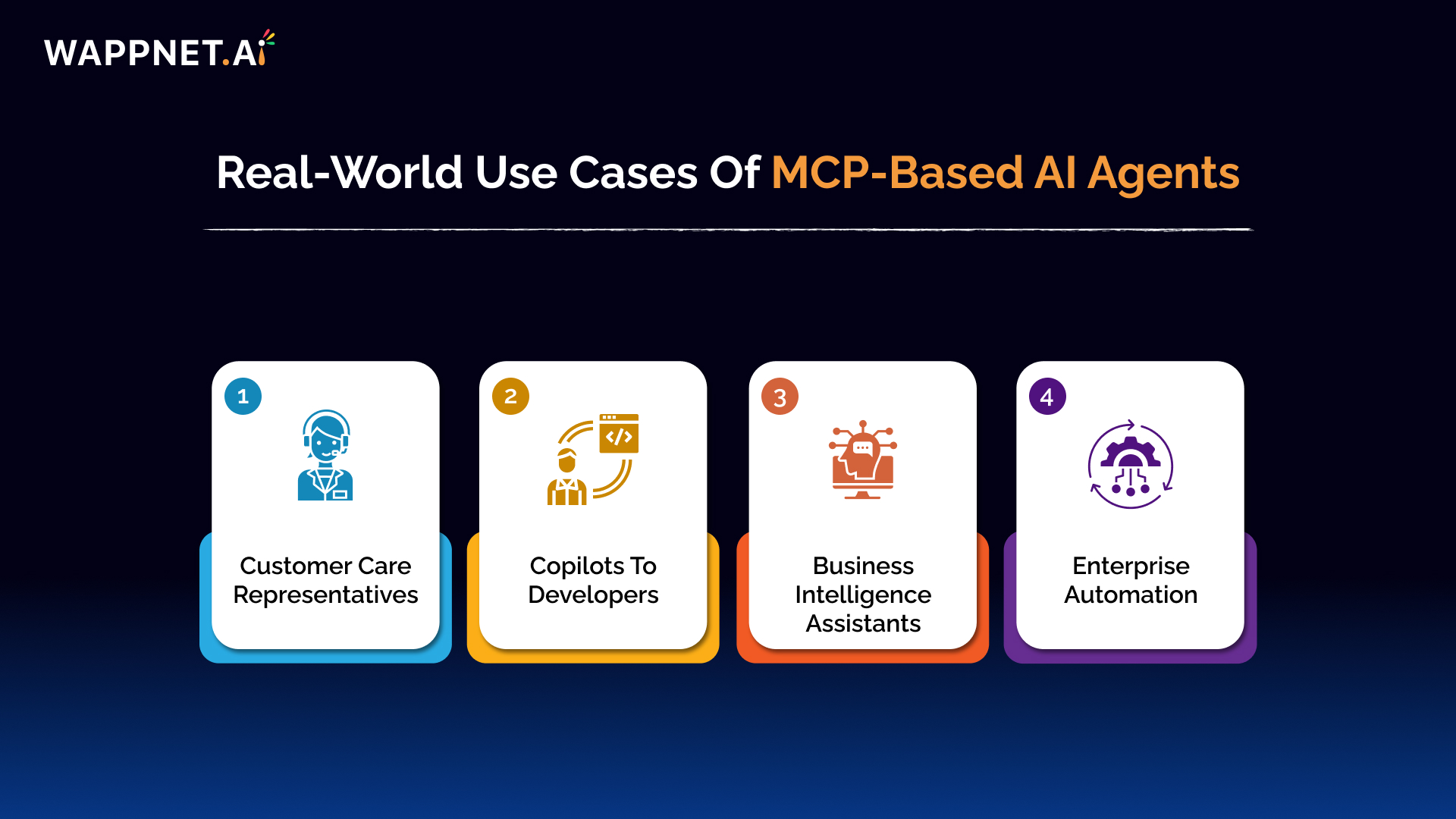

Real-World Use Cases of MCP-Based AI Agents

MCP-based agents perform well in situations where modularity and adaptability of tools are critical:

Customer Support Agents

Use real-time tool routing to escalate, translate, or pull in help articles.

Developer Copilots

Dynamically access debugging, code generation, or refactoring tools through centralized servers.

Business Intelligence Assistants

Pull in reporting, visualization, or analytics tools on demand.

Enterprise Automation

Activate task-specific RPA or workflow tools through a single common entry point: the tool server.

These examples reflect a growing emphasis on AI agents that reach their tools through smart, MCP-based routing.

Why Model Context Protocol-Based Agents Are the Future

Benefits of This Architecture:

- Tool Flexibility: Agent logic is unaffected when tools are changed or replaced.

- Scalable AI Deployment: You can deploy new tools on the central server, and agents can access them immediately.

- Smart Task Handling: Agents handle multifaceted, multi-step tasks better because they get access to the relevant tools in context.

- Easy Maintenance: There is no need to push updates to every agent separately.

This architecture is ideal for organizations aiming to deploy scalable AI agents that can adapt across departments, domains, and use cases.
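The tool-flexibility and easy-maintenance points can be illustrated with a small sketch. It assumes a hypothetical `ToolServer` with `deploy` and `call` methods and a made-up `summarize` tool; the agent function depends only on the tool's name, so replacing the implementation on the server needs no change to the agent.

```python
from typing import Callable, Dict


class ToolServer:
    """Hypothetical central server mapping stable tool names to implementations."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[[str], str]] = {}

    def deploy(self, name: str, impl: Callable[[str], str]) -> None:
        # Deploying under an existing name replaces the old implementation.
        self._tools[name] = impl

    def call(self, name: str, payload: str) -> str:
        return self._tools[name](payload)


def summarize_agent(server: ToolServer, text: str) -> str:
    """Agent logic: knows the tool name 'summarize', nothing about how it works."""
    return server.call("summarize", text)


text = "Centralized tool servers simplify maintenance."
server = ToolServer()

# Version 1: naive truncation.
server.deploy("summarize", lambda t: t[:10] + "...")
v1 = summarize_agent(server, text)

# Version 2: swapped in centrally; summarize_agent is untouched.
server.deploy("summarize", lambda t: t.split(".")[0].upper())
v2 = summarize_agent(server, text)

print(v1)
print(v2)
```

Every agent that calls `summarize` picks up the second version on its next request, with no per-agent update.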

Challenges in Centralized Tool Routing

Although this approach is effective, it is not free of complexity:

- Latency: Every tool call adds a round trip to the central server, so those calls have to be fast.

- Authentication: Agents must authenticate before they can access the server securely.

- Failover Design: A backup is necessary in case a tool malfunctions.

Making tool use by agents reliable and smooth takes proper infrastructure, caching, and API management.
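Here is one rough way the three challenges could be addressed together. Everything in this sketch is an assumption for illustration: `ToolGateway`, the token check, the time-to-live cache, and the ordered list of backup providers are not part of MCP, just a plain-Python picture of authentication, caching, and failover in front of a tool.

```python
import time
from typing import Callable, Dict, List, Optional, Set, Tuple


class AuthError(Exception):
    """Raised when a caller presents a token the gateway does not know."""


class ToolGateway:
    """Hypothetical gateway: checks tokens, caches results, fails over."""

    def __init__(self, valid_tokens: Set[str], ttl_seconds: float = 30.0) -> None:
        self._valid_tokens = set(valid_tokens)
        self._ttl = ttl_seconds
        self._cache: Dict[Tuple[str, str], Tuple[float, str]] = {}
        self._providers: Dict[str, List[Callable[[str], str]]] = {}

    def register(self, name: str, primary: Callable[[str], str],
                 *backups: Callable[[str], str]) -> None:
        self._providers[name] = [primary, *backups]

    def call(self, token: str, name: str, payload: str) -> str:
        # Authentication: reject unknown callers before doing any work.
        if token not in self._valid_tokens:
            raise AuthError("unknown token")

        # Latency: serve a recent identical request from the cache.
        key = (name, payload)
        hit = self._cache.get(key)
        if hit is not None and time.monotonic() - hit[0] < self._ttl:
            return hit[1]

        # Failover: try the primary, then each backup in order.
        last_error: Optional[Exception] = None
        for provider in self._providers[name]:
            try:
                result = provider(payload)
            except Exception as exc:
                last_error = exc
                continue
            self._cache[key] = (time.monotonic(), result)
            return result
        raise RuntimeError(f"all providers for {name!r} failed") from last_error


calls = {"primary": 0, "backup": 0}


def flaky_primary(payload: str) -> str:
    calls["primary"] += 1
    raise TimeoutError("primary tool timed out")


def backup(payload: str) -> str:
    calls["backup"] += 1
    return payload.upper()


gateway = ToolGateway(valid_tokens={"secret-token"})
gateway.register("shout", flaky_primary, backup)

first = gateway.call("secret-token", "shout", "hello")   # primary fails, backup answers
second = gateway.call("secret-token", "shout", "hello")  # answered from the cache
print(first, second, calls)
```

Caching only makes sense for tools whose results can safely be reused, so a real gateway would need a per-tool policy rather than one global time-to-live.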

The Road Ahead: Multi-Agent Systems and Centralized Tool Ecosystems

The future looks bright for AI systems that combine centralized tool servers with the Model Context Protocol. We are moving toward environments in which:

- Several agents are connected through shared toolkits.

- Agents are continuously updated with feature additions and removals without causing downtime.

- An open-source tools marketplace can seamlessly integrate with MCP-based agents, enabling broader functionality.

This reflects a clear shift in the developer patterns around LangChain, AutoGPT, and other agentic AI systems.

Conclusion: Smarter AI Starts with Model Context Protocol and Centralized Tools

The Model Context Protocol, agent tool routing, and centralized tool servers form the three pillars of next-generation AI architecture. This architecture provides the agility and intelligence you need in the current AI world, whether you are developing customer service bots, code assistants, or enterprise agents.
